Upcoming GVLab seminars

2025-12-5 15:00~16:00 Hybrid @UT Eng. Blg 2 TBD join online

Neuromusculoskeletal Simulation of Human Posture and Gait

Dr. Kohei Kaminishi, The University of Tokyo, Japan

Abstract: Understanding the robustness of human posture and gait (how we remain stable despite sensory noise, mechanical changes, or neurological impairment) is a central goal in motor control and biomechanics. Neuromusculoskeletal simulation provides a powerful framework for investigating this complex interplay between the nervous system and musculoskeletal dynamics. This talk presents our recent work investigating human motor control using body models with neural controllers. We examine quiet and perturbed stance, exploring how manipulating factors such as sensory noise and muscle tone alters control strategies. This approach has been applied to characterize distinct compensation patterns in post-stroke patients and in patients with Parkinson’s disease. We are also extending these simulations to 3D gait and linking model parameters with clinical and imaging data to bridge the gap between neural dysfunction and observable movement patterns. The talk will also cover practical aspects of neuromusculoskeletal modeling.

Biography: Kohei Kaminishi is a Project Lecturer at the Research into Artifacts, Center for Engineering (RACE) at The University of Tokyo. He holds a Ph.D. (2019) in Engineering from The University of Tokyo. His research integrates human movement analysis, neuromusculoskeletal modeling, and clinical neuroscience. He uses computational modeling to explore the biomechanical and neural principles underlying human motor control, particularly balance and locomotion, and applies these insights to understand motor impairments in neurological populations.

Past seminars

2025-10-15 15:00~16:00 Hybrid @UT Eng. Blg 2 TBD join online

The Olfactory Turn in Social Robotics. Futuring of Human–Robot Sociality with ScentDia

Prof. Luisa Damiano, IULM University, Italy

Abstract: One of the key challenges for a socially sustainable diffusion of social robots is how to design robotic agents that support human sociality while remaining clearly identifiable as “artificial others.” Addressing this challenge requires moving beyond anthropomorphic mimicry toward design strategies that emphasize compatible alterity. The paradigm “robots with us, not like us” (Damiano et al., 2025) provides a framework for conceiving and creating social robots as distinct social actors that can engage meaningfully with humans without confusing their identity with human identity. This perspective is crystallized in ScentDia, a social robot developed with its creator, robotic artist Mari Velonaki, and perfumer Manos Gerakinis. As the first robot to communicate through olfactory stimuli, ScentDia introduces the notion of an olfactory identity marker: a deliberately non-human, non-biological scent that asserts robotic difference while enabling interaction (Damiano et al., 2025). It thereby initiates an olfactory turn in social robotics. The talk will present the theoretical background of the ScentDia project, outline key elements of its design process, and draw on insights from its world premiere (21 February 2025, Museo Nazionale della Scienza e della Tecnologia “Leonardo da Vinci,” Milan). It will then discuss the broader implications of olfactory social robotics for future research on socially sustainable forms of human–robot interaction.

Biography: Luisa Damiano is Full Professor of Logic and Philosophy of Science at IULM University (Milan), where she directs the PhD School in Communication Studies and co-directs CRiSiCo, the IULM Research Center on Complex Systems. She has published extensively in the epistemology of complex systems, the epistemology of the cognitive sciences, and the epistemology of the sciences of the artificial, with a particular focus on synthetic biology and cognitive and social robotics. Her books include Living with Robots (Harvard University Press, 2017), also published in Italian, French, and Korean editions.

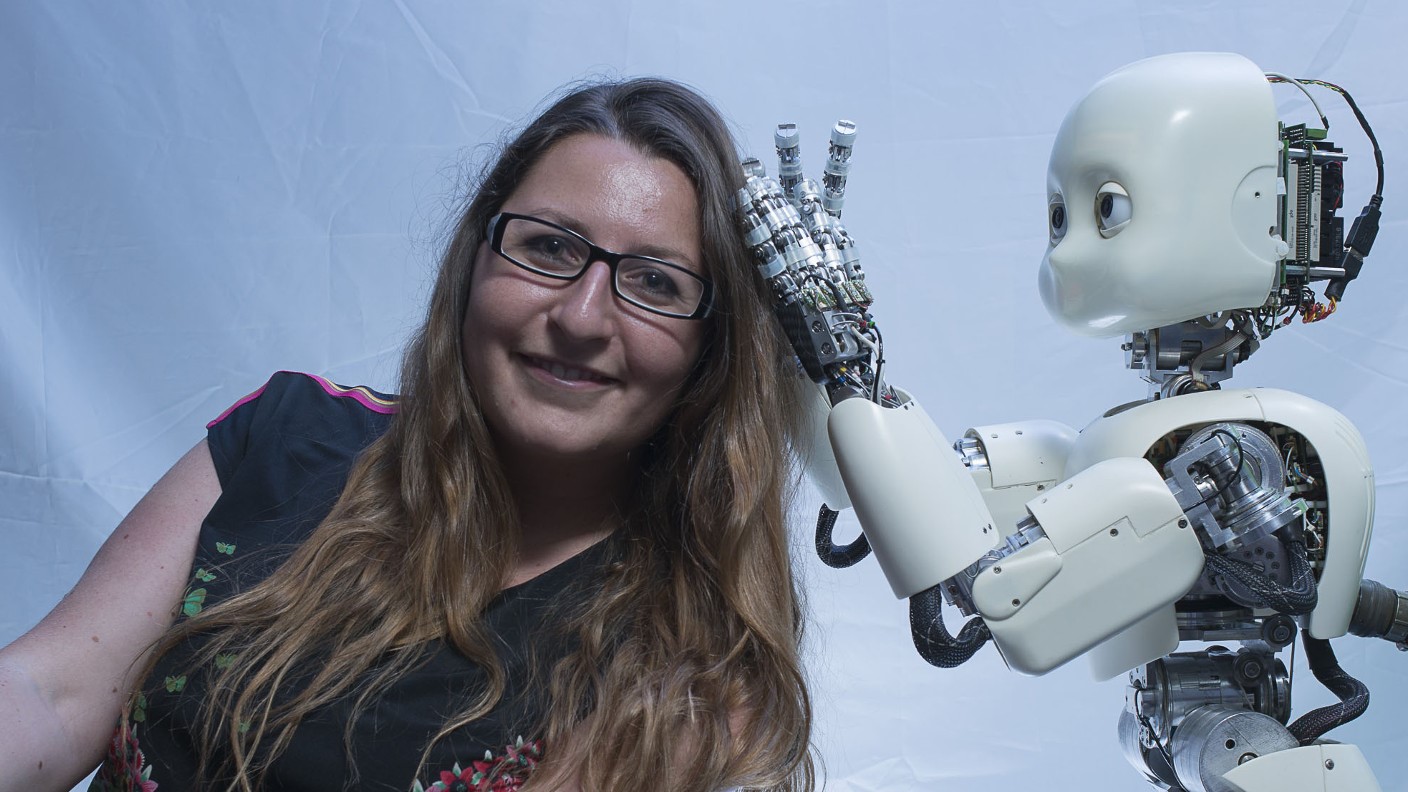

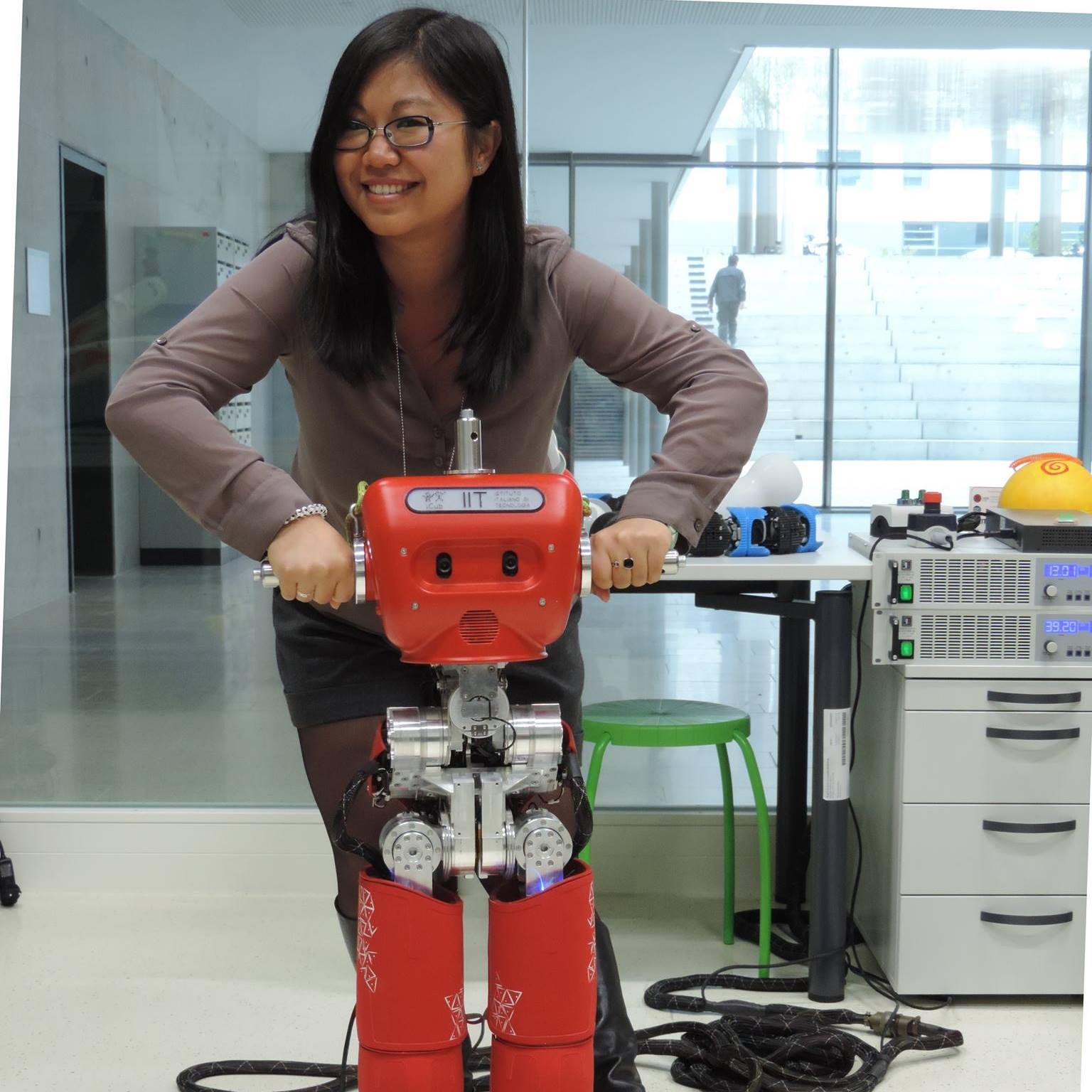

2025-9-16 15:00~16:00 Hybrid @UT Eng. Blg 2 room 231 join online

Human-Centered Shared Control and Teleoperation for Physically Assistive Robots in Remote Care

Prof. Yue Hu, University of Waterloo, Canada

Abstract: Collaborative robots are increasingly deployed in environments shared with humans, where meaningful interaction is essential for effectiveness and trust. Among these, teleoperated and semi-autonomous robots are being considered for caregiving roles, particularly in aging-in-place scenarios where direct human presence may be limited. Effective deployment of these systems requires careful attention to human factors for both the remote caregiver and the care recipient. The research at the Active and Interactive Robotics Lab (AIRLab) focuses on bridging physical and social human-robot interaction (pHRI and sHRI) by integrating human state estimation into shared control frameworks. Through experimental studies, we examine how robot responsiveness, form, and expressive behavior influence user engagement, trust, and cognitive load, quantifying mental state using physiological data, task performance, and personality traits. We are also developing caregiver-facing teleoperation interfaces that aim to improve usability, reduce cognitive effort, and support more intuitive remote interaction. To complement this work, we analyze communication patterns in teleoperated care settings using traffic fingerprinting techniques, identifying potential privacy risks where user state or activity could be inferred from network-level data.

Biography: Yue Hu is an Assistant Professor in Mechanical and Mechatronics Engineering at the University of Waterloo and director of the Active and Interactive Robotics Lab (AIRLab). She holds a PhD in Computer Science from Heidelberg University, with postdoctoral training at Heidelberg and the Italian Institute of Technology (IIT). Prior to joining Waterloo, she held academic and research positions in Japan, including as a JSPS fellow at AIST and as an Assistant Professor at Tokyo University of Agriculture and Technology. Her research bridges physical-social human-robot interaction, collaborative and humanoid robotics, and optimal control, with a recent interest in cybersecurity and privacy in robotic systems. She investigates how robots can interact safely and meaningfully with people while preserving users’ privacy and protecting against cyber threats. Yue is one of the co-chairs of the IEEE-RAS Technical Committee on Model-based Optimization for Robotics, an Associate Vice-President of the IEEE-RAS Members Activities Board (MAB), and an advisor for the organization Women in AI & Robotics.

2025-8-28 14:00~15:00 Hybrid @UT Eng. Blg 2 106-107 join online

From Failure Detection to Policy Debugging: Tracking Performance of Generative Imitation Policies

Dr. Haruki Nishimura, Toyota Research Institute, USA

Abstract: Modern generative models have enabled robots to acquire challenging dexterous skills directly from human demonstrations. Yet even these advanced policies often suffer unexpected failures during deployment. Moreover, their overall performance can drift over time (either deteriorating or improving) due to inevitable distribution shifts. The black-box and stochastic nature of such policies makes it particularly difficult to identify when and how these fluctuations occur. In this talk, I will present TRI's recent research efforts to confront this challenge, building on multiple theoretical and algorithmic foundations: uncertainty quantification, modern statistical analysis, and data attribution. Together, these tools allow researchers and practitioners to detect failures in real time, rigorously verify performance fluctuations, and trace them back to specific training data. This not only enables timely recovery from performance drops but also provides an effective framework for systematic policy improvement.

Biography: Dr. Haruki Nishimura is a Senior Research Scientist at Toyota Research Institute and the research co-lead of the Trustworthy Learning under Uncertainty project in the Large Behavior Models Division. Haruki earned his Ph.D. at Stanford University. Haruki's current research focuses on rigorous statistical policy evaluation, failure detection and mitigation, and active learning, in the context of end-to-end dexterous manipulation with Large Behavior Models. He is a recipient of the Masason Foundation Fellowship, the JASSO Overseas Fellowship, and the Best Paper Award at L4DC 2023 as the project supervisor.

2025-7-29 10:30~11:30 Hybrid @UT Eng. Blg 2 31A join online

Whole body model predictive control and reinforcement learning to generate motion on legged robots

Dr. Olivier Stasse, LAAS, France

Abstract: For over 20 years, the Gepetto group has been at the forefront of developing and testing new paradigms for motion generation in legged robots. Through an optimization-based approach that integrates motion planning and control, we have made significant progress in this field. This talk will revisit our key findings to date and use them as a foundation to motivate our current research on combining whole-body model predictive control with reinforcement learning.

Biography: Olivier Stasse is a Tenured Senior Research Scientist at the Laboratory of Analysis and Architecture of Systems (LAAS), CNRS, Toulouse, France. He earned his Ph.D. in Intelligent Systems from Sorbonne University and a master's degree in Operational Research from the same university. At LAAS, he leads the Gepetto group, which focuses on control, task and motion planning, perception, and experimentation on humanoid robots. He was a founding member of the JRL laboratory (CNRS/AIST). He led ROB4FAM, a joint lab with Airbus, from 2018 to 2024, and is participating in Dynamograde, a joint lab between PAL Robotics France and LAAS. After serving as Associate Editor of IEEE Transactions on Robotics, he is now Senior Editor at RAL in Animaloids and Motion Planning.

2025-6-27 16:00~17:00 Hybrid @UT Eng. Blg 2 31A join online

Soft Robotics for Humanoids: Adaptive Feet and Joints for Agile Walking

Dr. Irene Frizza, The University of Tokyo, Japan

Abstract: What helps a humanoid robot walk more like a human, not just in shape but in movement, balance, and adaptability? While many robots use rigid parts and precise control, human walking relies on softness, flexibility, and quick responses to changing conditions. This talk looks at how soft, adaptive parts can improve robot movement. The focus is on pneumatically controlled soft robotic feet that adjust their stiffness in real time, and on how soft joints can reduce impact and improve stability. These features help robots move more naturally and handle uneven ground better. As robots enter daily life, it becomes important to ask: how can mechanical systems better reflect the adaptability of the human body? And what role could softness play in the future of robot design?

Biography: Irene Frizza received her B.Sc. degree in Electronic Engineering (2016) and M.Sc. in Automation and Robotic Engineering (2019) from the University of Pisa, Italy. From 2020 to 2024, she was a researcher at the Joint Robotics Laboratory (JRL), National Institute of Advanced Industrial Science and Technology (AIST), in Tsukuba, Japan. She received her Ph.D. degree from the University of Montpellier (France) in 2023. She is currently a postdoctoral researcher with the Japan Society for the Promotion of Science (JSPS) at The University of Tokyo, Japan. Her research focuses on the design and control of soft robotic feet and joints, with the goal of improving adaptive locomotion in humanoid robots.

2025-4-25 16:00~16:45 Hybrid @UT Eng. Blg 2 106-107 join online

Co-Learning with Robots: Enhancing Human-Robot Communication through Interactive Interfaces

Prof. Wafa Johal, University of Melbourne, Australia

Abstract: As robots become more present in everyday environments, enabling natural and effective communication between humans and robots is key to successful collaboration. This talk explores the idea of co-learning, where humans and robots adapt to each other through shared interaction, and how it can support non-technical users in training and working with robots. I’ll present a series of projects that investigate how robots can learn from demonstration using intuitive interfaces like virtual and mixed reality, and how feedback and expressive cues can help users understand robot behavior and uncertainty. We’ll look at how gaze and other implicit signals support smoother collaboration, and how robots can communicate risk in ways that foster trust and informed decision-making. Finally, I’ll share work on educational robots designed to support learners in classroom settings.

Biography: Wafa Johal is an Associate Professor at the University of Melbourne, where she co-leads the HCI research group and leads the Collaborative Human-Robot Interaction (CHRI) research team. Her research develops socially intelligent, assistive robots that interact naturally with humans, using social signals, cognitive reasoning, and expressive behaviour. Her interests span human-robot collaboration, learning from demonstration, tangible robots, haptics, shared control for teleoperation, and social robotics. Recent projects explore mixed reality for robot learning and the role of embodiment in decision-making, with applications in education, rehabilitation, and manufacturing. Wafa has helped organize major conferences, including serving as General Co-Chair for the 2025 ACM/IEEE HRI Conference. She is also an Associate Editor of the International Journal of Child-Computer Interaction. More at wafa.johal.org and chri-lab.github.io.

2025-4-30 14:00~15:00 Hybrid @UT Eng. Blg 2 31A join online

Thermal Communication Between Human and Robot

Prof. Yukiko Osawa, Keio University, Japan

Abstract: The design of robots intended for physical interaction with humans inherently involves conflicting requirements, as the characteristics that ensure safety in mechanical systems often stand in opposition to those that facilitate human touch. Achieving a balance between these two aspects remains a significant challenge. In particular, while heat is considered beneficial for facilitating human touch (for example, in reproducing warmth), it also poses risks as a potential source of mechanical failure. Our research poses the question: how can we reconcile human-centric design with safety in robot systems? Addressing this question from the perspective of thermal control, the project aims to establish a human–robot interface technology that enables bidirectional interaction through a thermally driven sensing and control system, realized via a robot skin integrated with a thermal circulation mechanism.

Biography: Yukiko Osawa received her B.E. degree (2015) in system design engineering and her M.E. (2016) and Ph.D. (2019) degrees in integrated design engineering from Keio University, Yokohama, Japan. She was a Research Fellow of the Japan Society for the Promotion of Science (JSPS) from 2017 to 2019. From 2019 to 2021, she was a JSPS Overseas Research Fellow and worked as a postdoctoral researcher at CNRS-LIRMM in France, in the IDH (Interactive Digital Humans) team. From 2021 to 2024, she worked at the National Institute of Advanced Industrial Science and Technology (AIST) in Tokyo, Japan. She currently works as a Senior Assistant Professor in the Department of Applied Physics and Physico-Informatics at Keio University, Yokohama, Japan. Her research interests include human interfaces, human-robot interaction, haptics, and thermal systems, and her current research focuses on developing controllable robot skin for physical human-robot interaction.

2025-3-26 15:00~16:30 Hybrid @UT Eng. Blg 2 31A join online

Care in the era of technologies and artificial intelligence

Prof. Vanessa Nurock, Université Côte d’Azur, France

Abstract: Modern civilization has been undergoing a major technological revolution accelerated by AI. This revolution has ethical and political dimensions that make it similar in scope to the Enlightenment, yet potentially contrary in values. Blurring traditional binary oppositions such as ‘natural’ vs. ‘artificial,’ it opens up a horizon of unpredictability that threatens to compromise our capacity to manage risk and our desire for control. As we face this challenge, the task of philosophical ethics is to articulate an ethics of care and of heightened sensitivity to human relations that is commensurate with the needs of a hyperconnected world. Let us try to go beyond the fruitless divide between obtuse resistance and managerial technosolutionism to address serious theoretical and practical issues, asking what we should reasonably expect from new technologies and AI, and how we can design them ethically so that we can uphold the Enlightenment injunction: “Dare to think!”

Biography: Vanessa Nurock is Professor of Philosophy at Université Côte d’Azur and Deputy Director of the Center of Research in History of Ideas (Centre de Recherches en Histoire des idées – CRHI). She is also the chairholder of the UNESCO EVA Chair on the Ethics of the liVing and the Artificial (https://univ-cotedazur.fr/the-unesco-chair-eva/chair-eva). Her work is situated at the intersection of ethical, political, and scientific issues, with a particular emphasis on questions of gender and education. She has worked on topics such as justice and care, animal ethics, nanotechnology, cybergenetics, and neuroethics. Her current research focuses on the ethical and political problems raised by Artificial Intelligence and Robotics.

2025-2-28 13:00~14:00 Hybrid @UT Eng. Blg 2 31A join online

Movement perception: Different perspectives

Dr. Amel Achour-Benallegue, AIST, Japan

Abstract: In this talk, the perception of movement is presented through two distinct lenses. The first examines the interplay between shape and motion in computer-generated animations, focusing on how disruptions to their coherence affect viewer impressions. The second investigates the perception of emotions expressed through movement, exploring responses both to regular motion displays and to altered associations of shapes and motions. Together, these approaches contribute to a deeper understanding of movement perception in relation to form and emotional expression.

Biography: Amel Achour-Benallegue is a postdoctoral researcher in the Cognition, Environment, and Communication Research Team at the Human Augmentation Research Center (AIST). She holds a Ph.D. in Cognitive Science and an engineering degree in computer science. In addition to her scientific background, she is an artist, having studied painting at the Fine Arts School in Algeria and completed a master’s degree in Art, Science, and Technology at the Institut Polytechnique de Grenoble in France. Her research spans facial expressions, emotion, movement perception, and the multidisciplinary intersections of art, anthropology, philosophy, neuroscience, cognitive psychology, and sensing technology.

2025-1-15 15:00~16:00 Hybrid @UT Eng. Blg 2 31A join online

Contraction analysis of nonlinear dynamical systems: a tutorial survey

Prof. Jean-Jacques Slotine, Massachusetts Institute of Technology, USA

Abstract: It has been more than a quarter century since a paper by Lohmiller and Slotine introduced contraction analysis to the nonlinear dynamics and control community, outlining the role of differential analysis using state-dependent Riemannian metrics and its many potential applications. Research in this domain is now extremely active, and we will review the basics as well as some recent work in our group on applications to machine learning and to non-autonomous partial differential equations.

Biography: Jean-Jacques Slotine is Professor of Mechanical Engineering and Information Sciences, Professor of Brain and Cognitive Sciences, and Director of the Nonlinear Systems Laboratory. He received his Ph.D. from the Massachusetts Institute of Technology in 1983, at age 23. After working at Bell Labs in the computer research department, he joined the faculty at MIT in 1984. Professor Slotine teaches and conducts research in the areas of dynamical systems, robotics, control theory, computational neuroscience, and systems biology. One of the most cited researchers in systems science, he was a member of the French National Science Council from 1997 to 2002, a member of Singapore’s A*STAR SigN Advisory Board from 2007 to 2010, and a Distinguished Faculty at Google AI from 2019 to 2023, and he has been a member of the Scientific Advisory Board of the Italian Institute of Technology since 2010.

2024-12-17 14:00~16:00 3 guests this time! Hybrid @UT Eng. Blg 2 room 231 join online

4D-Printing for Bio-Inspired and Bio-Based Design

Prof. Tiffany Cheng, Cornell University, USA

Abstract: What if our buildings and products could be manufactured and operated the way biological systems grow and adapt? As an alternative to conventional construction and manufacturing, this talk introduces 4D-printing for leveraging biobased materials and bioinspired design principles for applications ranging from self-adjusting wearables and self-forming structures to self-regulating facades.

Biography: Tiffany Cheng is an incoming Assistant Professor at Cornell University's Department of Design Tech, where she directs the MULTIMESO Lab. Her work examines biobased materials and bioinspired structures for smarter and more sustainable forms of making. Tiffany is currently based in Germany at the Institute for Computational Design and Construction (ICD), where she leads the Material Programming research group. Tiffany defended her PhD at the University of Stuttgart. Prior to that, Tiffany earned her Master in Design Studies (Technology) from Harvard University and her Bachelor of Architecture from the University of Southern California.

Learning, Hierarchies, and Reduced Order Models

Dr. Steve Heim, MIT, USA

Abstract: With the advent of ever more powerful compute and learning pipelines that offer robust end-to-end performance, are hierarchical control frameworks with different levels of abstraction still useful? Hierarchical frameworks with reduced-order models (ROMs) have been commonplace in model-based control for robots, primarily to make long-horizon reasoning computationally tractable. I will discuss some of the other potential advantages of hierarchies, why we want ROMs and not simply latent spaces, and the importance of matching the ROM time-scale to each level of the hierarchy. In particular, I will show some results in learning for legged robots using ROMs with a cyclic inductive bias, both hand-designed and learned. Time permitting, I will also discuss using _viability measures_ as an estimate of the intuitive notion of “how confident/safe is this action”, and why this is only useful at the right level of abstraction.

Biography: Steve Heim is a research scientist at the Biomimetic Robotics Lab at MIT (USA). He is interested in understanding dynamics in natural systems, especially how and why animals (learn to) move the way they do. Previously, Steve was at the Max Planck Institute for Intelligent Systems (Germany), where he completed a postdoc with the Intelligent Control Systems Group and his PhD with the Dynamic Locomotion Group. He obtained his MSc and BSc from ETHZ (Switzerland), with stays at Tohoku University (Japan), EPFL (Switzerland), and TU Delft (Netherlands).

Designing Computing Systems for Robotics and Physically Embodied Deployments

Prof. Sabrina M. Neuman, Boston University, USA

Abstract: Emerging applications that interact heavily with the physical world (e.g., robotics, medical devices, the internet of things, augmented and virtual reality, and machine learning on edge devices) present critical challenges for modern computer architecture, including hard real-time constraints, strict power budgets, diverse deployment scenarios, and a critical need for safety, security, and reliability. Hardware acceleration can provide high-performance and energy-efficient computation, but design requirements are shaped by the physical characteristics of the target electrical, biological, or mechanical deployment; external operating conditions; application performance demands; and the constraints of the size, weight, area, and power allocated to onboard computing, leading to a combinatorial explosion of the computing system design space. To tame this complex design space, our approach is to identify common computational patterns shaped by the physical characteristics of the deployment scenario (e.g., geometric constraints, timescales, physics, biometrics), and distill this real-world information into systematic design flows that span the software-hardware system stack, from applications down to circuits. In this talk, we will describe our recent work in designing computing systems for robotics, and outline steps towards a future of systematic co-design of computing systems with the real world.

Biography: Sabrina M. Neuman is an Assistant Professor of Computer Science at Boston University. Her research interests are in computer architecture design informed by explicit application-level and domain-specific insights. She is particularly focused on robotics applications because of their heavy computational demands and potential to improve the well-being of individuals in society. She received her S.B., M.Eng., and Ph.D. from MIT, and she was a postdoctoral NSF Computing Innovation Fellow at Harvard University. She is a 2021 EECS Rising Star, and her work on robotics acceleration has received Honorable Mention in IEEE Micro Top Picks 2022 and IEEE Micro Top Picks 2023. She holds the 2023-2026 Boston University Innovation Career Development Professorship.

2024-11-20 11:00~12:00 Hybrid @UT Eng. Blg 2 room 31A join online

Human state estimation using various sensors and modalities

Prof. Mariko Isogawa, Keio University, Japan

Abstract: When estimating the scene state in a real-world environment, we need to consider various aspects, such as the impact of lighting conditions or occlusions on estimation accuracy, resource costs such as memory and power consumption, and the privacy of personal information in captured data. However, even when one measurement faces constraints that are difficult to overcome, it may be possible to partially resolve them by using other measurements or modalities. This talk discusses the advantages and disadvantages of various measurement methods using visible-light sensors, such as event cameras and transient cameras, as well as modalities other than visible light, such as wireless signals and acoustic signals. Based on the latest research trends, I will also discuss the challenges and prospects of applying these methods to human state estimation tasks such as human pose estimation and 3D shape reconstruction.

Biography: Mariko Isogawa received her M.S. and Ph.D. degrees from Osaka University, Japan, in 2013 and 2019, respectively. From 2013 to 2022, she worked at Nippon Telegraph and Telephone Corporation as a Researcher. She was a Visiting Scholar at Carnegie Mellon University from 2019 to 2020. Since 2022, she has been affiliated with Keio University, where she is currently an Associate Professor in the Department of Information and Computer Science, Faculty of Science and Technology. Her research interests include computer vision, such as scene and human state estimation with various modalities.

2024-10-24 10:30~11:30 Hybrid @UT Eng. Blg 2 room 323 join online

The intelligence of the hand

Prof. Lorenzo Jamone, Queen Mary University of London, UK

Abstract: The robots of today are mainly employed in heavy manufacturing industries (e.g., automotive) to perform simple and repetitive tasks in very structured environments. The robots of the future will be different. They will perform more complex tasks in less structured environments, even in collaboration with humans. To do so, they will need to use their hands (almost) as smartly as humans do, which is a tremendous challenge! How will this be achieved? Explicit insights from biology and psychology, well-established control and engineering principles, and modern AI techniques have to be combined and properly integrated. In the talk I will briefly summarize my 20-year research journey in the area of Cognitive Robotics (with the twofold objective of taking inspiration from humans to realize better robotic systems and, at the same time, understanding more about human intelligence), with a focus on "the intelligence of the hand": tactile exploration and manipulation of objects, but also communication and interaction, in both humans and robots.

Biography: Lorenzo Jamone is a Senior Lecturer (Associate Professor) in Robotics at the School of Engineering and Materials Science of Queen Mary University of London (UK). He is part of ARQ (Advanced Robotics at Queen Mary) and is the founder and director of the CRISP group: Cognitive Robotics and Intelligent Systems for the People. He is the Chair of the IEEE Technical Committee on Cognitive and Developmental Systems. He received the MS degree (honours) in computer engineering from the University of Genoa, Italy, in 2006, and the PhD degree in humanoid technologies from the University of Genoa and the Italian Institute of Technology in 2010. He was an Associate Researcher at the Takanishi Laboratory, Waseda University (Tokyo, Japan), from 2010 to 2012, and at the Computer and Robot Vision Laboratory, Instituto Superior Técnico (Lisbon, Portugal), from 2012 to 2016. He has over 100 publications with an H-index of 29. His research interests include cognitive robotics, robot learning, robotic manipulation, and tactile sensing.

2024-8-29 15:00~16:00 Hybrid @UT Eng. Blg 2 room 106-107 (GVLab) join online

Motion feasibility in multi-contact locomotion

Milutin Nikolić, University of Novi Sad, Serbia

Abstract: Bipedal walking is an ability humans naturally have, but it is very hard for robots. The main reason is that a standing robot is underactuated and inherently unstable. Special attention has to be given to the contact forces acting on the robot’s feet, since contact between the feet and the ground has to be maintained constantly to ensure stable walking. The first indicator of walking stability was the ZMP (zero moment point), introduced some 55 years ago. With the increasing capabilities of humanoid robots, we need to tackle cases not covered by the ZMP, i.e., locomotion with multiple non-coplanar contacts and contacts between the hands and the environment. The presentation shows how the contact configuration defines the feasible contact forces, and thus the feasible center of mass (CoM) motion. For several characteristic cases, we will compare the new criteria against the ZMP. Finally, we will prove that contact configurations allowing arbitrary CoM accelerations exist.

Biography: Milutin Nikolić received his M.Sc. in Mechatronics and his Ph.D. in Robotics from the University of Novi Sad, Serbia, in 2008 and 2015, respectively. He currently works as an Associate Professor of Robotics at the University of Novi Sad, Faculty of Technical Sciences, Chair of Mechatronics, Robotics, and Automation. His research includes whole-body motion synthesis, walking pattern generation, contact stability criteria, multi-body system dynamics, and robot manipulation and grasping. He spent 18 months at the Nakamura-Yamamoto Lab, The University of Tokyo, Japan, as a Project Assistant Professor working on human motion capture and analysis. Nikolić has also worked as a research associate at NTU Singapore, TU Technikum Wien, and UMIT (Hall in Tyrol). He also has industry experience, having worked as a principal robotic arm manipulation engineer at Aeolus Robotics, where he developed novel approaches to arm calibration and whole-body motion planning.

2024-7-10 15:00~16:00 Hybrid @UT Eng. Blg 2 room 31A join online

Artificial Intelligence: Applied Innovation & Ethics

Jeff Lui, PhD, CPA, MBA

Abstract: Artificial Intelligence (AI) has recently provided new opportunities for researchers and practitioners to leverage data for innovative applications in almost every sector. As AI becomes increasingly invasive in mainstream activities, our machines will need more empathy, emotion, and ethics. In this seminar, Jeff will discuss his innovative work in Affective Computing and how to successfully deploy empathic machines, and will call for an elevated role of ethics in AI. The seminar will conclude with a look into the future and at how interdisciplinary dialogue is essential for future harmony between humans and machines.

Biography: Jeff Lui is a Canadian entrepreneur, innovator, and academic. His work centers on Artificial Intelligence, Computer Vision, and Applied Ethics. Previously, Jeff worked at Google, helping the company design innovative people AI programs to improve employee happiness. He was also the Director of AI at Deloitte, where he spearheaded the firm’s new global AI Practice, and has consulted with dozens of Fortune 500 clients, including Formula 1, Microsoft, and the United Nations. Jeff continues to drive innovation by designing creative intelligence through new entrepreneurial ventures.

|

2024-6-13 15:30~16:30 Hybrid @UT Eng. Blg 2 room 31A join online From Loops to Leaps: Feedback Control in Robotics - Hurdles, Rewards, and Sacrifices Dr. Mehdi Benallegue, AIST, Japan Abstract: The traditional model-based motion generation pipeline consisting of "planning, task control, stiff tracking" is running into strong limitations, especially for dynamic tasks such as bipedal locomotion. Most of the issues stem from the inaccuracy of simplified models in the presence of disturbances and dynamic motions. In this presentation, I will discuss a very classic way to address these issues: using state and measurement feedback at a higher level of the control pipeline. Through three examples, I will present the difficulty of the problem and the benefits of this procedure, together with an idea of the paradigm change that it requires. Biography: Dr. Mehdi Benallegue holds an engineering degree from the National Institute of Computer Science (INI) in Algeria, obtained in 2007. He earned a master's degree from the University of Paris 7, France, in 2008, and a Ph.D. from the University of Montpellier 2, France, in 2011. His research took him to the Franco-Japanese Robotics Laboratory in Tsukuba, Japan, and to INRIA Grenoble, France. He also worked as a postdoctoral researcher at the Collège de France and at LAAS-CNRS in Toulouse, France. He is currently a Research Associate at the CNRS-AIST Joint Robotics Laboratory in Tsukuba, Japan. His research interests include robot estimation and control, legged locomotion, biomechanics, neuroscience, and computational geometry. |

|

2024-4-25 14:00~15:00 Hybrid @UT Eng. Blg 2 room TBD join online Dynamics Gradient Calculation and Fast Dynamical Simulation of Flexible Rods Dr. Taiki Ishigaki, The University of Tokyo, Japan Abstract: Flexible rods, such as those in sports prostheses and golf club shafts, are used to achieve dynamic motion in sports. In soft robotics, researchers are also working to realize dynamic motion using the elasticity of flexible structures, which makes simulation techniques for such structures important. In this talk, the presenter will introduce his modeling and dynamics calculation method for flexible rods and his dynamics gradient calculation method, which extends the algorithm for rigid-link systems, and will present fast forward dynamics simulations. Models that integrate flexible structures with rigid-link systems, and their applications, will also be presented. Biography: Dr. Taiki Ishigaki received his Ph.D. from the Department of Mechano-Informatics, The University of Tokyo, in 2024. His research focuses on the dynamics calculation, simulation, and control of robots with rigid and soft structures, especially humanoid robots; he is also interested in applying this work to human motion analysis. |

|

2024-4-11 16:00~17:00 Hybrid @UT Eng. Blg 2 room 31A join online Nao in the Wild: Sensitive groups and other challenges Paulina Zguda, Jagiellonian University, Poland Abstract: What is the aim of the HRI field: to create robots that belong exclusively in the laboratory, or robots meant to assist people in the environments they frequent? In social robotics, there is growing interest in the in-the-wild approach, which lets people interact with robots in familiar spaces and through familiar activities. In this talk, I will present several studies conducted with my team at Jagiellonian University involving two particularly sensitive groups of participants: children and older adults. Our approach aims to identify and address the challenges that social robotics faces beyond the boundaries of the laboratory. Additionally, I will describe several socially significant factors that people may pay attention to during interaction with robots. Biography: Paulina Zguda is a cognitive scientist and currently a PhD candidate in philosophy at the Doctoral School in Humanities at Jagiellonian University in Krakow, Poland. Her main research interest is how people react to social robots, and in particular, how their aesthetics and behaviour determine people’s perceptions of these artificial agents. |

|

2024-3-7 16:00~17:00 Hybrid @UT Eng. Blg 2 room 31B join online Modifying Social and Spatial Boundaries through Human-Robot Interactions Dr. Anne-Lise Jouen, University of Burgundy, France Abstract: Interactions between humans and robots can be utilized in various contexts such as healthcare, education, and rehabilitation. This presentation will explore two distinct perspectives on Human-Robot Interaction (HRI). First, we will introduce our work on social interactions with robots (human-human-robot interactions), derived from a participatory science project conducted in a nursing home (EHPAD) that aimed to enhance interactions among elderly residents through a robotic narrative assistant. This preliminary study revealed a gradual increase in interactions among the residents, along with significant interest in robotic technologies. The second part of this presentation will focus on a very different facet of HRI, from a more neuroscientific perspective: robotic embodiment (i.e., being placed in the position of a robot and seeing through its eyes) and its impact on our spatial representations. Through several studies involving robotic telepresence and VR embodiment, we have demonstrated the substantial impact of these technologies on how we perceive our bodies and space. These studies will be discussed within the broader context of evaluating the impact of emerging technologies using reliable metrics, including neuroscientific and psychometric measurements. Biography: Dr. Anne-Lise Jouen obtained a PhD in Neuroscience from the University of Lyon, specializing in neuroimaging. Although her work initially focused on language and speech, she has a deep passion for new technologies, particularly robotics, having completed her doctorate at a robotics laboratory (Dr. Peter Dominey's Robot Cognition Lab). 
Throughout her postdoctoral positions (at the Universities of Paris, Tokyo, and Geneva), she has worked to develop an approach combining neuroscientific and psychometric measures to objectively assess the impact of new technologies in education and rehabilitation. Her recent work at the University of Burgundy has led her to explore the complex notion of the Self and the impact of robotic and VR technologies on our spatial and bodily representations. |

|

2024-2-24 14:00~15:00 Hybrid @UT Eng. Blg 2 room 231 join online Exploring Child-robot Interaction: Insights, Roles, and Challenges Prof. Shruti Chandra, Specially Appointed Assistant Professor, Tokyo Institute of Technology, Japan. Research Fellow, University of Waterloo, Canada. Upcoming Assistant Professor, University of Northern British Columbia, Canada. Biography: Dr. Shruti Chandra holds a Joint PhD degree in Electrical and Computer Engineering from École Polytechnique Fédérale de Lausanne, Switzerland and Instituto Superior Técnico, Portugal. Her work focuses on using interactive technologies such as social robots and screen-based interfaces to support people’s well-being, emphasising real-world applications. Her research is deeply rooted in three essential domains: human-centred design, autonomous and interactive systems, and the dynamics of social interactions. |

|

2024-1-24 10:00~11:30 Hybrid @UT Eng. Blg 2 room 232 join online Stable adaptation and learning Jean-Jacques Slotine, Massachusetts Institute of Technology Abstract: While we may soon have AI-based artists or scientists, we are nowhere near autonomous robot plumbers. The human brain still largely outperforms robotic algorithms in most tasks, using computational elements 7 orders of magnitude slower than their artificial counterparts. Similarly, current large-scale machine learning algorithms require millions of examples and close proximity to power plants, compared to the brain's few examples and 20 W power consumption. We study how modern nonlinear systems tools, such as contraction analysis, virtual dynamical systems, and adaptive nonlinear control, can yield quantifiable insights about collective computation and learning in large physical systems and dynamical networks. For instance, we show how stable implicit sparse regularization can be exploited online in adaptive prediction or control to select relevant dynamic models out of plausible physically-based candidates, and how most elementary results on gradient descent and optimization based on convexity can be replaced by much more general results based on Riemannian contraction. Biography: Jean-Jacques Slotine is Professor of Mechanical Engineering and Information Sciences, Professor of Brain and Cognitive Sciences, and Director of the Nonlinear Systems Laboratory. He received his Ph.D. from the Massachusetts Institute of Technology in 1983, at age 23. After working at Bell Labs in the computer research department, he joined the faculty at MIT in 1984. Professor Slotine teaches and conducts research in the areas of dynamical systems, robotics, control theory, computational neuroscience, and systems biology. 
One of the most cited researchers in systems science, he was a member of the French National Science Council from 1997 to 2002, a member of Singapore’s A*STAR SigN Advisory Board from 2007 to 2010, a Distinguished Faculty at Google AI from 2019 to 2023, and has been a member of the Scientific Advisory Board of the Italian Institute of Technology since 2010. |

|

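2023-12-21 13:00~14:00 Hybrid @UT Eng. Blg 2 room 31A join online Research on the Development of Health, Medical, and Welfare Devices for Older Adults and People with Disabilities Toshiaki Tanaka (田中敏明), Hokkaido University of Science; Research Center for Advanced Science and Technology / Institute of Gerontology, The University of Tokyo Abstract: For more than 30 years, I have conducted research in rehabilitation science for older adults, people with disabilities, and patients, in connection with ergonomics and welfare (assistive) engineering for daily-living support. Throughout this period, I have consistently studied methods and technologies that, from the standpoint of welfare engineering, support people in clinical settings who have the motor, sensory, and brain-function impairments that accompany aging. One concrete example is research on sensory-feedback balance training for older adults: through industry-government-academia collaboration, a medical device for standing-balance assessment and training was patented and put into practical use, paving the way to commercialization, and it is now used clinically. Another is research on a system for assessing and training activities of daily living in people with spatial neglect caused by higher brain dysfunction. Because patients with visuospatial cognitive impairment have difficulty walking and operating wheelchairs, we used three-dimensional visual displays in virtual reality (VR) to analyze the symptoms of spatial neglect and developed rehabilitation methods to remove this barrier. This work has since developed into a remote rehabilitation system (under the SCOPE program of the Ministry of Internal Affairs and Communications) and research on a wheelchair-operation alert system for people with dementia. In addition, as outcomes of industry-government-academia collaborative research supported by NEDO and others, I have contributed to the local community through the practical application and commercialization of many assistive products, including a one-touch cane for icy roads, snow-removal tools that help prevent low back pain, and tools that reduce the physical burden of farm work. This talk will cover fall-prevention rehabilitation training devices, rehabilitation using virtual reality technology, and other medical and welfare devices being developed toward commercialization through industry-government-academia collaboration. Biography: Toshiaki Tanaka (田中 敏明): physical therapist, Ph.D. (Engineering), ergonomics specialist. |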

|

2023-10-19 15:00~16:00 Hybrid @UT room Eng. 2 room join online Human augmentation at Kyocera's Future Design Laboratory Dr. Jacqueline Urakami, Kyocera's Future Design Research Laboratory, Japan Abstract: At Kyocera's Future Design Research Laboratory in Yokohama, we are advancing R&D on human augmentation technologies, aiming for seamless integration between "humans" and "technology." Human augmentation is an interdisciplinary field encompassing methods, technologies, and applications designed to harmoniously merge the distinctive capabilities of individuals and machines. In this presentation, we will introduce three systems developed in our laboratory: 1) a walk sensing and coaching system designed to improve walking posture and technique; 2) a physical avatar that mitigates the isolation of remote work by enabling smooth communication akin to in-office interactions, fostering effective communication among employees and teams dispersed across different locations; and 3) perception and cognition augmentation, in the form of a device that captures and reproduces parts of conversations missed the first time they were heard. The presentation will also address the challenges of social signal processing for human augmentation, particularly in real-world scenarios. Biography: In 2022, Jacqueline Urakami became a researcher at Kyocera’s Future Design Research Laboratory. Her primary research area is Human Augmentation, with a specific focus on its impact on human performance. Jacqueline Urakami received a Master's degree in Psychology from Dresden University (Germany) in 1998 and a Ph.D. in Psychology from Chemnitz University of Technology (Germany) in 2002. 
After completing her doctoral studies, she spent two years as a Humboldt Fellow at Keio University's Shonan Fujisawa Campus, where she conducted research on interface design using eye-tracking technology. Throughout her career, Dr. Urakami has gained extensive experience in Japan, including teaching roles at Keio University and a professorship at the Tokyo Institute of Technology. Her research has focused mainly on Human-Computer Interaction and Human-Robot Interaction. |

|

2023-8-22 11:00~12:00 Hybrid @UT room Eng. 2 room 233 join online Challenges in Social Robotics: Long-term Interaction, Personalization and Trust Prof. Adriana Tapus, ENSTA Paris, Institut Polytechnique de Paris, France Abstract: Social robots are increasingly part of our daily lives, and the design of their behaviors greatly affects the way people interact with them. To ensure optimal engagement, robots should adapt over the long term and personalize their behavior to each user's specific needs and profile. One important social construct to consider is humor, which has been shown to reduce communication barriers and enhance interpersonal relationships. A robot able to express various forms of humor can be more natural and effective in human-robot interactions. This presentation will explore innovative perception and interaction capabilities and address the challenges raised by inter-individual differences and intra-individual variability over time. Biography: Adriana TAPUS is Full Professor in the Autonomous Systems and Robotics Lab in the Computer Science and System Engineering Department (U2IS) at ENSTA Paris, Institut Polytechnique de Paris, France. Since 2019, she has been the Director of the Doctoral School of the Institut Polytechnique de Paris (IP Paris). Prof. Tapus serves as a member of the Women in Science and Engineering Committee at IP Paris. In 2011, she obtained the French Habilitation (HDR) for her thesis entitled “Towards Personalized Human-Robot Interaction”. She received her PhD in Computer Science from the Swiss Federal Institute of Technology Lausanne (EPFL), Switzerland, in 2005. She worked as an Associate Researcher at the University of Southern California (USC), where she was among the pioneers in the development of socially assistive robotics, while also working on machine learning, human sensing, and human-robot interaction.
Her main interests are long-term learning (in particular, in interaction with humans), human modeling, and online robot behavior adaptation to external environmental factors. She has worked on applications ranging from socially assistive robotics for people with physical and cognitive impairments (e.g., children with autism, the elderly, people suffering from sleep disorders, people in rehabilitation after a stroke) to autonomous vehicles. Prof. Tapus is a Senior Editor of the International Journal of Robotics Research (IJRR) and an Associate Editor for the International Journal of Social Robotics (IJSR), ACM Transactions on Human-Robot Interaction (THRI), and Frontiers in Robotics and AI. She is a member of the program and steering committees of several major robotics conferences (e.g., General Chair of HRI 2019, Program Chair of HRI 2018, General Chair of ECMR 2017). Prof. Tapus has been a keynote speaker at several workshops and conferences and has more than 200 research publications. In 2016, she was named one of the “25 women in robotics you need to know about.” She received the Romanian Academy Award for her contributions to assistive robotics in 2010, and in 2022 the French Prime Minister made her a Knight of the Academic Palms. She is a member of IEEE, AAAI, and ACM. She is also the coordinator and a member of multiple EU and French national research grants (e.g., EU ENRICHME, EU RAICAM, Bots4Education). Further details about her research and activities can be found at https://perso.ensta-paris.fr/~tapus/eng/index.html |

|

2023-7-25 11:00~12:00 Hybrid @UT or join online Intersectionality and AI: Gender, Age, and Everything in Between Prof. Katie Seaborn, Tokyo Institute of Technology, Japan Abstract: People are diverse. People create machines. People may or may not embed human diversity within those machines. Even when they do, they may not realize it or foresee the implications. In this talk, I will introduce the notion of intersectionality and fundamental "sections" and "intersections," especially gender and age, and how these relate to research and practice in robotics and AI. I will cover case studies from my own work on humanoid robots, translation in natural language processing, and computer voice. Biography: Prof. Katie Seaborn is an Associate Professor in the Department of Industrial Engineering and Economics at Tokyo Institute of Technology. Since 2020, she has led the Aspirational Computing Lab at Tokyo Tech. She currently holds the positions of Visiting Researcher at the RIKEN Center for Advanced Intelligence Project (AIP) and Honorary Researcher at the UCL Interaction Centre (UCLIC). She previously worked as a Postdoctoral Researcher at RIKEN AIP, Co-operative Research Fellow at the University of Tokyo (2019-20), JSPS Postdoctoral Fellow at the University of Tokyo (2018-19), and Research Fellow at UCLIC (2017-18). She received her Ph.D. in Human Factors from the Department of Mechanical & Industrial Engineering (MIE) and graduated from the Collaborative Program in Knowledge Media Design (KMDI) at the University of Toronto (2016). Her research interests include interaction design with voice-based agents, critical computing perspectives on robots and gender, inclusive design with older adults, and technologies for psychological well-being. Her seminal work on gamification has been cited over 2,700 times. |

|

2023-6-27 14:00~15:00 Hybrid @UT room Eng. 2 room 31A or join online Modularity in Aerial Robotics and its Applications Prof. Moju Zhao, the University of Tokyo, Japan Abstract: Over the last decade, research on aerial robots has become significantly more active. Among the platforms developed, several modular designs have been proposed to provide reconfigurability for advanced maneuvering in midair. In this talk, we will present the development of our original modular aerial robots, covering the methodology of modular design, modelling and control, and motion planning. Furthermore, unique aerial applications, such as snake-like maneuvering and manipulation in midair, will also be introduced. Biography: Prof. Moju Zhao is currently an Assistant Professor at The University of Tokyo. He received his doctoral degree from the Department of Mechano-Informatics, The University of Tokyo, in 2018. His research interests are mechanical design, modelling and control, motion planning, and vision-based recognition in aerial robotics. His main achievement is the articulated aerial robots, which have received several conference and journal awards, including the Best Paper Award at IEEE ICRA 2018. |

|

2023-5-16 11:00~12:00 Hybrid @UT or online At the frontiers of biomechanics and robotics Bruno Watier, LAAS, France Abstract: This presentation will give an overview of current projects at LAAS-CNRS at the interface of robotics and biomechanics, including achievements in gait simulation, exoskeleton design, and human-robot interaction. Biography: Bruno Watier is a full-time professor. He joined JRL as a CNRS delegate in 2023. Since 1999, he has been an Associate Professor at Université de Toulouse 3 (UT3), LAAS-CNRS laboratory, France, in the Gepetto team. He received his Ph.D. in mechanics from ENSAM in 1997 and his Habilitation from UT3 in 2015. His research focuses on human movement analysis, motor control, and human-robot interaction. He has organized several conferences and workshops related to biomechanics. Bruno Watier currently leads four French national ANR projects. He is president of the Société de Biomécanique and leads a major degree program in sports performance. |

|

2023-4-18 11:00~12:00 Hybrid @UT Blg 2 room 223 or online A Medical Robot for Physical-HRI based Nasopharyngeal Swab Sampling Prof. Tianwei Zhang, Chinese University of Hong Kong, China Abstract: Over the past three years, nasopharyngeal (NP) swab sampling and reverse-transcription polymerase chain reaction (RT-PCR) testing have proven to be very effective and reliable for the early detection of COVID-19 infections. However, this highly reliable RT-PCR testing is difficult to perform, as sampling requires a dedicated swab and trained medical personnel. In this work, we present a robot that performs nasopharyngeal swab sampling for RT-PCR testing. We designed a new hardware system and developed advanced hybrid perception algorithms combining 3D vision and force sensing, allowing the robot to accurately locate the nostril, insert the swab into it, and then carefully advance the swab along the inferior nasal tract to the designated sampling position in the posterior nasal cavity. Results from experiments with more than 8,000 volunteers indicate that the robot's PCR sampling is as accurate as sampling by humans. Questionnaire results show that robotic NP swab sampling is more comfortable than manual sampling. Biography: Prof. Tianwei Zhang is currently an Associate Research Scientist at the Shenzhen Institute of Artificial Intelligence and Robotics for Society and a Research Assistant Professor at the Chinese University of Hong Kong, Shenzhen. He received his doctoral degree from the Department of Mechano-Informatics, The University of Tokyo, in 2019. His research interests are visual manipulation and dynamic SLAM. He has published several articles in ICRA, IROS, and RA-L. |

|

2023-2-16 16:00~17:00 Hybrid @UT or online Adaptive Dialogue Strategy for Teachable Social Robots Rachel Love, Monash University, Australia Abstract: Social robots have great potential in educational settings, with the ability to deliver personalised, one-on-one interactions for students. Robots that take on the role of the student, while the student takes on the role of teacher, can improve learning outcomes through increased engagement in, and responsibility for, the teaching task. Social robots may also benefit from adapting their behaviours to the needs and preferences of individual users. The research presented in this talk uses a reinforcement learning approach to adapt the dialogue choices that the teachable robot makes at different points in the teaching conversation. This teaching interaction uses the Curiosity Notebook, a flexible online teaching platform that helps students learn about a simple classification task. The aim of this research is to improve the student's learning outcomes and engagement through an adaptive, personalised approach. This talk will discuss the motivations and findings of this research. Biography: Rachel is a third-year PhD candidate in the Robotics Lab within the Department of Electrical and Computer Systems Engineering at Monash University. She obtained her Bachelor’s in Biomedical Engineering with Honours from the University of Auckland. This was followed by several years of industry experience as a Conversation Engineer at the Auckland-based start-up Soul Machines, developing dialogues and conversational and emotional behaviours for its artificially and emotionally intelligent Digital Humans. Her particular research interests lie in conversational AI, dialogue modelling, and human-machine interaction. |

|

2023-1-31 11:00~12:00 Hybrid @UT or online Interacting with Socially Interactive Agents Prof. Catherine Pelachaud, Sorbonne University, France Abstract: Our research focuses on modeling Socially Interactive Agents (SIAs), i.e., agents capable of interacting socially with human partners, communicating verbally and non-verbally, showing emotions, and adapting their behaviors to foster their partners' engagement during the interaction. As interaction partners, SIAs should be able to adapt their multimodal behaviors and conversational strategies to optimize the engagement of their human interlocutors. We have also been working on enabling agents to respond to social touch from a human and to touch the human to convey different intentions and emotions. We have developed models that equip these agents with these communicative and social abilities. In this talk, I will present the work we have conducted. Biography: Catherine Pelachaud (CNRS-ISIR) is Director of Research in the laboratory ISIR, Sorbonne University. Her research interests include socially interactive agents, nonverbal communication (face, gaze, gesture, and touch), and adaptive mechanisms in interaction. With her research team, she has been developing Greta, an interactive virtual agent platform that can display emotional and communicative behaviors. She has participated in the organization of international conferences such as IVA, ACII, and AAMAS. She has served as an associate editor of several journals, including IEEE Transactions on Affective Computing, ACM Transactions on Interactive Intelligent Systems, and the International Journal of Human-Computer Studies. She is a co-editor of the ACM handbook on socially interactive agents (2021-22). |

|

2022-12-21 17:00~18:00 Hybrid @UT or online Sharing scientific sports training expertise by video motion capture, a time-series database and open source visualization tools Dr. Cesar Hernandez Reyes, the University of Tokyo, Japan Abstract: Sports and exercise are valuable habits. Having a training goal can improve a person's mental and physical health, and such goals can be achieved more effectively with information technologies. For example, a person can set quantitative goals by visualizing their muscle forces and joint motions. However, obtaining this data is difficult because motion capture (Mocap) systems, force plates, EMG sensors, etc., are costly and require technical expertise to use. Furthermore, analyzing biomechanical data requires the knowledge of sports coaches, clinicians, and other experts. This talk will introduce ongoing research that aims to build a system making scientific training accessible to the general public. This system combines markerless video Mocap with a time-series database platform optimized for managing and visualizing human musculoskeletal data. Finally, this talk will discuss ideas for using Inverse Reinforcement Learning to identify how an expert athlete's choice of motions differs from a novice's. Biography: Cesar Hernandez Reyes has been a Postdoctoral Researcher at the Human Motion Data Science lab, Graduate School of Engineering, the University of Tokyo, since October 2021. He is currently working on democratizing scientific sports training by developing technologies that bridge the expertise gap between engineers, sports scientists, and general users. He obtained his doctoral and master's degrees in Systems and Control Engineering from the Tokyo Institute of Technology in 2021 and 2018, respectively. He also obtained his bachelor's degree in Mechatronics Engineering from Universidad de Monterrey in Mexico. 
His previous research experience includes the computational modeling of the olfactory search behavior of the silk moth and the fusion of probabilistic and bio-inspired olfactory search algorithms for application in mobile robots. |

|

2022-11-2 11:00~12:00 Hybrid @TUAT GVLab or online Modeling, design and control of soft robotics systems: a tour of Defrost team's research Dr. Quentin Peyron, INRIA, France Abstract: Soft robotic systems consist of flexible structures that undergo large deformations to produce motion and perform various tasks. Due to their intrinsic compliance, they are particularly suited to applications involving narrow and cluttered environments and physical interaction with humans. However, this compliance also raises unique challenges and open research questions: real-time kinemato-static and dynamic modeling, exploration of their large design space, and control. The Defrost team of INRIA and CRIStAL in Lille has developed expertise in these three aspects. I will present our recent work on efficient finite element and Cosserat beam models for soft robots interacting with their surroundings, and the associated software developments through the simulation platform and consortium SOFA. I will then show some results on soft robot design using evolutionary optimization algorithms and anisotropic meta-materials, as well as some of the prototypes developed by the team. Finally, I will present our progress on soft robot control using nonlinear control theory and AI, and will finish with perspectives for the years to come. Biography: Dr. Quentin Peyron is a research scientist at INRIA, France, working with the Defrost team. After obtaining an engineering degree in mechatronics from INSA Strasbourg and a master's in robotics from the University of Strasbourg, he obtained a PhD in robotics from the University of Bourgogne Franche-Comté. During his PhD, he worked under the co-supervision of the ICube (Strasbourg) and FEMTO-ST (Besançon) laboratories on the modeling, analysis, and design of slender and thin continuum robots for minimally invasive surgery. He also collaborated with the MSRL team of ETH Zürich on magnetic continuum robots. 
He was a Postdoctoral Fellow at the Continuum Robotics Lab of the University of Toronto from 2020 to 2021, where he worked on tendon-driven continuum robots. During his postdoc, he received the Post-Doctoral Fellowship Award from the University of Toronto. He joined INRIA in January 2022. Dr. Peyron is a reviewer for the IEEE RA-L and T-RO journals, as well as for Mechanism and Machine Theory and Autonomous Robots. His research interests are the modeling, design, and control of continuum and soft robots, and the development of eco-designed soft robotics for industrial applications. |

|

2022-10-20 15:00~16:00 Hybrid @UT Eng. 2 room 232 or online A Career in Corporate Robotics Research Dr. Katsu Yamane, Path Robotics, USA Abstract: This talk is a personal reflection on my career over the past 14 years working in robotics at the corporate research labs of Disney, Honda, Bosch, and now Path Robotics. I will first present my thoughts on how the size, industry, and culture of a company determine how its R&D department operates and how, in turn, your experience as a researcher is affected. I will then illustrate different roles of corporate research by showing examples of how projects are initiated, managed, and transferred (or shelved). Finally, I will introduce Path Robotics' technology and explain why I believe we can become a (rare) successful robotics company. Hopefully this talk will help students and young researchers choose their future careers in robotics, whether in academia, industry, or entrepreneurship. Biography: Dr. Katsu Yamane is currently a Principal Research Scientist at Path Robotics Inc., a late-stage startup specializing in autonomous welding technology. He has held research scientist positions at Disney Research, Pittsburgh, Honda Research Institute USA, and Bosch Research North America. Prior to moving to industry, he was a postdoctoral researcher at Carnegie Mellon University and a faculty member at the University of Tokyo, where he also received his PhD in mechanical engineering in 2002. Dr. Yamane's research experience spans from manipulation planning and control to human motion analysis and biomechanics. He has also been active in the academic community as an editor and organizer of various journals and conferences, as well as the author of over 100 peer-reviewed technical publications. |

|

2022-9-27 11:00~12:00 Hybrid @UT Eng. 2 room 233 or online Embodiment and Explainable Behaviors for Teaming Up with Human-Centered Robots Prof. Luis Sentis, U. Texas at Austin, USA Abstract: In this talk, I will first delve into control architectures and embodiment for legged manipulators such as NASA's Valkyrie humanoid robot and other custom-built bipeds and humanoid robots. Emphasis will be placed on trajectory generation, dynamic walking, and trajectory tracking using whole-body prioritized multi-level task control and model predictive control. I will then explain key ideas on training neural networks to predict the physical outcome of a commanded goal, that is, whether it will succeed given the interaction environment. Predicting whether a robot will encounter collisions, singularities, or balance problems at runtime is key to providing feedback to human operators, as a means of explaining the robot's physical behavior before task execution. We will show how this property can be used in combination with a cognitive architecture to enable natural spoken interactions between human users and robots. In the next part of the talk, I will discuss teaming up with robots by employing imitation learning techniques and using thin-film epidermal electrodes for brain activity sensing. Finally, I will discuss our current efforts in human-autonomy teaming in tasks such as indoor and outdoor object search with mixed teams of robots and humans. Biography: Luis Sentis is a professor at the University of Texas at Austin and an executive member of Good Systems. He heads the Human-Centered Robotics Laboratory, focusing on embodiment, motion planning, and control of human-centered robots such as legged robots, humanoids, and exoskeleton systems.
More recently, he has led and collaborated on new projects in multi-robot search in indoor and outdoor environments, perceptual legged navigation in dynamic and crowded environments, and the use of wearable brain sensors for human factors studies. In 2015, he co-founded Apptronik, a company building next-generation humanoid avatars. |

|

2022-7-27 11:00~12:30JST Hybrid (contact us to join in person) Join online N. Zaidi (Strate, France) Impacts of SF imaginaries on robot design: low diversity of productions and how to better design through imaginaries? Abstract: The increasing development of digital technologies such as social robotics over the last decades has already revealed some of their social limits. Many studies have focused on the negative impacts of the technocentric nature of technological artefact design, whose meaning and usefulness are not obvious to end users and whose introduction into real-life ecosystems can disrupt pre-existing organizations. Participatory approaches have been developed to take greater account of the complexity of the real world and the diversity of stakeholders by involving them more deeply in the design process. Although they make it possible to create products that are better adapted to environmental and human constraints, these approaches nonetheless seem to carry a bias specific to new technologies: the existence, for all stakeholders (roboticists, designers, or end users), of strong imaginaries related to technological objects that come mainly from science fiction. In a context of social, environmental, and health crises where dystopian fictions about technology have become the norm, the question of how imaginaries influence the design of technologies arises. How do these imaginaries impact the design process? Why do they seem particularly important in HRI and social robotics? Which design methods can be developed to take this bias into account in the design process, and even to design better through those imaginaries? This talk aims to share some reflections on this topic and to open a conversation on how to raise awareness of unconscious imaginaries in our research projects. Biography: Nawelle Zaidi is a PhD student in Design at the Projekt Lab of the University of Nîmes, France, and at the Robotics By Design Lab of Strate School of Design, France.
Her research focuses on the design of social robots for older people and caregivers in medical nursing facilities. She is conducting practice-based research in a company of geriatric institutions in France. She previously received a Master's degree in generalist engineering (specialization in images and signal processing) from Ecole Centrale Marseille in 2016 and a Master's degree in Interaction Design from Strate School of Design in 2018, and worked for a couple of years as a UX Designer in industry. |

|

2022-6-23 15:00~16:30JST Hybrid (contact us to join in person) Prof. D. Lestel (École Normale Supérieure, France) Evolutionary Challenge of Animal Robots Abstract: Robots and AI are not just machines that make it possible to do new things, but ontological artifacts that profoundly change what it means to be alive. Robots and AI belong to a special category of non-biological living agents, to which we can also attach other artifacts such as dolls, puppets, or fetishes. Many robots look like animals, but they are non-biological animal “transpecies” that do not belong to any species and that profoundly transform the ecology of life on Earth. This talk, accessible to non-philosophers, will discuss some of the problems posed by these disruptive machines, which engage us in a major ecological revolution that has nothing to do with global warming. Biography: Dominique Lestel teaches contemporary philosophy at the École Normale Supérieure in Paris and is a tenured researcher at the Husserl Archives. He was a member of the French-Japanese Laboratory of Informatics at the University of Tokyo in 2013-2014, a Visiting Professor in the Department of Mechanical Systems Engineering (in the GVLab) of Tokyo University of Agriculture and Technology with a JSPS Fellowship (2017-2018), and a Berggruen Fellow at the Center for Advanced Study in the Behavioral Sciences at Stanford University (2018-2019). His latest book, Machines Insurrectionnelles (Fayard, 2021), develops a post-biological theory of living agents. |

|

2022-4-26 16:00~17:30JST Online Prof. B. Indurkhya (Jagiellonian University, Poland) Faking emotions and a therapeutic role for robots and chatbots: Ethics of using AI in psychotherapy Abstract: In recent years, there has been a proliferation of social robots and chatbots designed so that users form an emotional attachment to them. Such robots and chatbots can also be used to provide psychotherapy. In this talk, we will start by presenting the first such chatbot, a program called Eliza, designed by Joseph Weizenbaum in the mid-1960s. This program did not understand anything; it relied on keyword matching and a few simple heuristics to keep the conversation flowing and to give the user an illusion of understanding. At the time, Weizenbaum was taken aback by the intensity of the emotional attachment users felt towards this program, which prompted him to highlight this negative aspect of technology in his thought-provoking book "Computer Power and Human Reason". Biography: Bipin Indurkhya is a professor of Cognitive Science at the Jagiellonian University, Krakow, Poland. His main research interests are social robotics, usability engineering, affective computing, and creativity. He received his Master’s degree in Electronics Engineering from the Philips International Institute, Eindhoven (The Netherlands) in 1981, and his PhD in Computer Science from the University of Massachusetts at Amherst in 1985. He has taught at various universities in the US, Japan, India, Germany, and Poland, and has led national and international research projects in collaboration with companies such as Xerox and Samsung. |

|

2022-3-14 11:00~12:30JST Online Prof. V. Hernandez (SurfClean, Japan) Adaptive virtual reality video game and machine learning Abstract: In this talk, I will present an overview of an industrial project I am leading, as well as my ongoing research on human activity recognition at GVLab. This project aims to develop adaptive video games in virtual reality. Virtual reality technology is an attractive complementary tool for rehabilitation and can make an important contribution to home rehabilitation. To maximize its effectiveness, the system is designed to provide an adaptive virtual environment based on success or failure in achieving a specific movement goal. To this end, various machine learning algorithms are being investigated. Biography: Vincent Hernandez obtained his PhD in Human Movement Sciences in 2016 from the University of Toulon, France. In 2017, he was a postdoctoral researcher at Tokyo University of Agriculture and Technology (TUAT), Tokyo, Japan. Between 2018 and 2019, he was a postdoctoral researcher at the University of Waterloo, Ontario, Canada. He is currently an adjunct associate professor at TUAT as well as a project manager at SurfClean Inc., Sagamihara, Japan. His research interests focus mainly on human activity recognition and adaptive virtual reality video game development. |

|

2022-1-27 10:30~12:00JST Online Dr. M. JANG (ETRI, Korea) Introduction to Research Efforts on Robot AI for Elderly-Care Abstract: In this talk, I introduce research efforts and results from our project at ETRI on developing robot AI technologies for elderly care, focusing especially on: 1) domain-specific AI targeting home environments and elderly people, and 2) robot intelligence for automated communicative gesture generation. I hope to share our vision for developing robots that really help people in the real world. Biography: Dr. Minsu JANG has been working at ETRI (Electronics and Telecommunications Research Institute) since 1999, and he received his PhD from KAIST in 2015. His research interests include social robots, human-robot interaction, robot SW integration, and artificial intelligence in general. |

|

2021-12-23 14:30~16:00JST Online Dr. D. Vincze (Chuo University, Japan) Etho-Robotics, plus Reinforcement Learning for behaviour models Abstract: One of the challenges in social robotics is creating a robot that humans will accept as a long-term companion. To sustain interest over the long term, one possible solution is to construct behaviour models for social robots based on animal behaviour. Studying animal behaviour is the main goal of Ethology; therefore, by using the results of Ethology, behaviour models for robotics can be constructed. The novel field of Etho-robotics aims to create behaviour models based on ethological studies. Dog-human attachment behaviour has been transformed into a computational model using a fuzzy automaton control system incorporating sparse fuzzy rule-bases. Work is underway to connect this behaviour model to a real environment where humans can interact with a physical robot. Biography: Prof. David Vincze is currently a JSPS postdoctoral research fellow in the Human-System Laboratory (Niitsuma Lab.) at the Department of Precision Mechanics at Chuo University, Tokyo, Japan, on leave from the Department of Information Sciences at the University of Miskolc, Hungary, where he is an associate professor. He graduated in information engineering from the University of Miskolc and later earned his PhD in Computer Science in 2014, focusing on machine learning and human-robot interaction. His research in machine learning examines fuzzy rule-based learning systems and algorithms for extracting knowledge in a form that can be directly interpreted by humans. His research in HRI includes designing ethologically inspired behaviour models implemented as fuzzy control systems. He has also contributed to the open source community by implementing new ideas for Linux and UNIX based systems in data centers. |

|

2021-12-9 17:00~17:30JST Online Prof. L. Damiano (IULM University, Milan, Italy) 'Understanding by building'. Genealogy, epistemology and relevance of the synthetic approach to the modeling of life and cognition Abstract: "Understanding by building" is the promise of the “synthetic method”, which enables the emerging sciences of the artificial to contribute to the scientific study of life and cognition, lato sensu, based on the construction and experimental exploration of “software”, “hardware” and “wetware” models of living and cognitive processes. This talk proposes a reconstruction of the genealogy and the epistemology of reference of the synthetic method, with two main goals: defining the novelties that this methodological approach introduces with regard to the traditional way of 'doing science', and addressing the controversial issue of its relevance to the scientific understanding of natural living and cognitive phenomena.